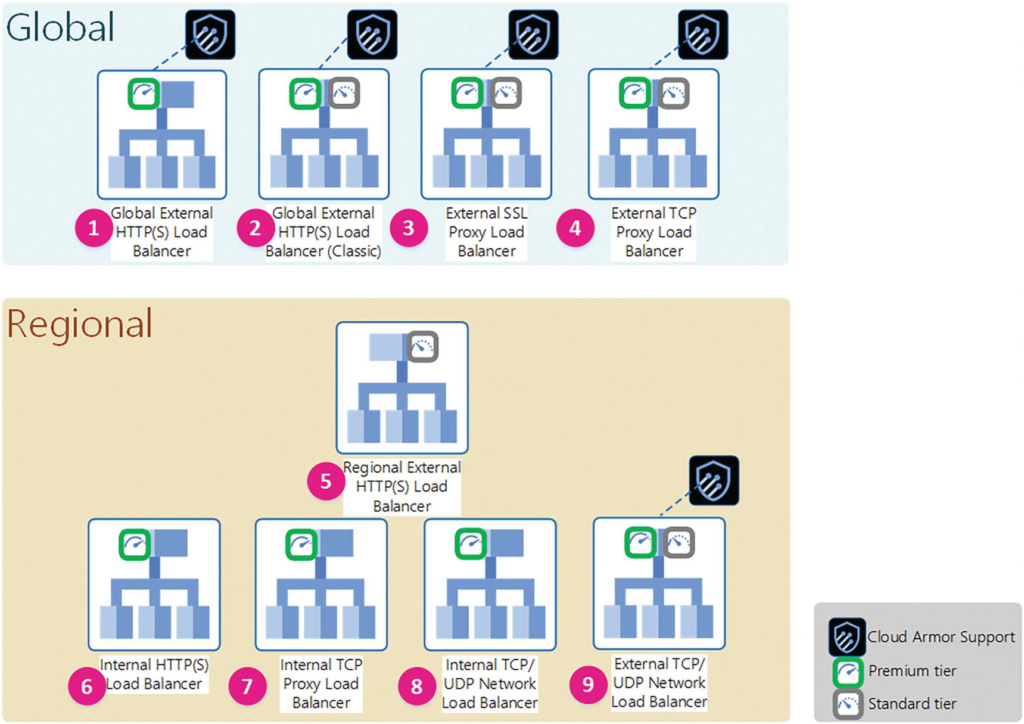

There are nine types of load balancers, each either global or regional. A global load balancer has components (backends) in multiple regions, whereas a regional load balancer has all of its components in a single region.

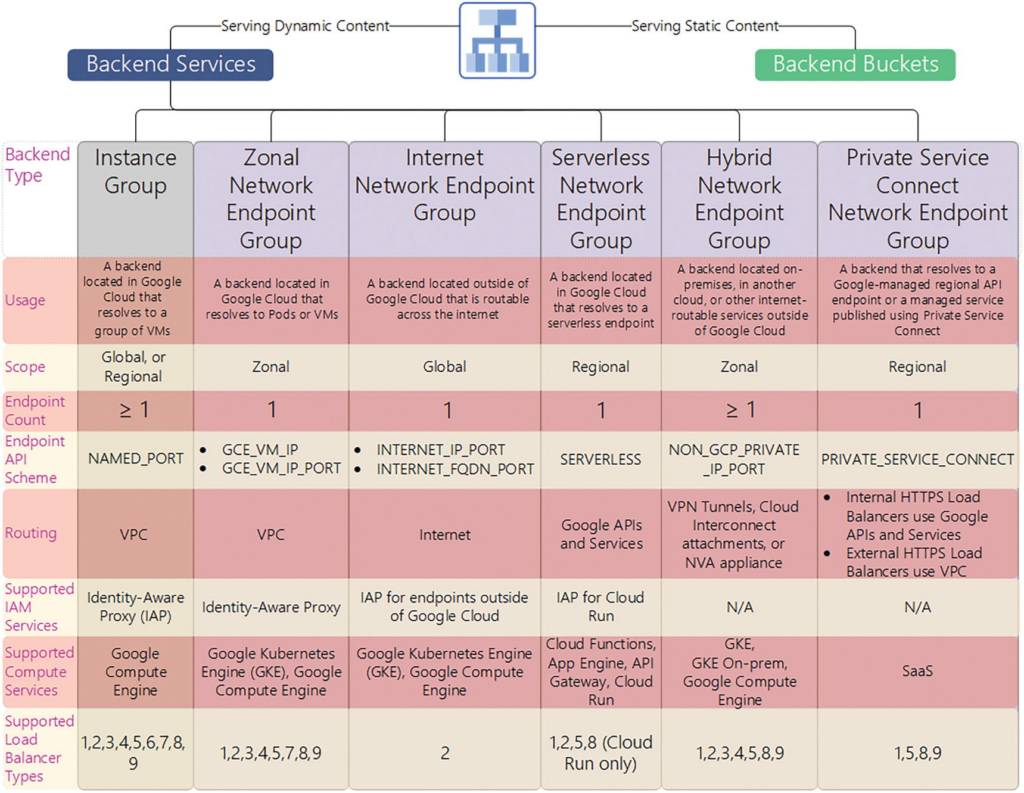

Backend services are the means a load balancer uses to send incoming traffic to the compute resources responsible for serving requests. The compute resources are also known as backends, while a backend service is the Google Cloud resource that mediates between the load balancer frontend and the backends.

A number of backend options are available. If the preceding requirements drive you toward an IaaS approach, then managed instance groups (MIGs) are an excellent option for your workload backends. Google Cloud has generalized the “network endpoint group” construct from container-native only to a number of different “flavors” in order to meet the nonfunctional requirements presented by hybrid and multi-cloud topologies.

A managed instance group treats a group of identically configured VMs as one item. These VMs are all modeled after an instance template, which uses a custom image, and they do not need an external IP address.

network tag allow-health-checks
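As a rough sketch of the MIG setup described above, the following wraps the two gcloud commands in a shell function so that nothing is created until you call it. The function name and the resource names (lb-backend-template, lb-backend-mig, lb-network, backend-subnet, my-custom-image), as well as the region, zone, and group size, are placeholders assumed for illustration; only the allow-health-checks tag comes from these notes.

```shell
# Sketch only: every resource name below is a placeholder, not from the source.
create_backend_mig() {
  # Instance template: custom image, no external IP, tagged so that
  # firewall rules targeting allow-health-checks apply to the VMs.
  gcloud compute instance-templates create lb-backend-template \
      --region=us-central1 \
      --network=lb-network \
      --subnet=backend-subnet \
      --image=my-custom-image \
      --tags=allow-health-checks \
      --no-address || return 1

  # Managed instance group: two identical VMs created from the template.
  gcloud compute instance-groups managed create lb-backend-mig \
      --zone=us-central1-c \
      --size=2 \
      --template=lb-backend-template
}

# Nothing runs until the function is called:
# create_backend_mig
```

Because the VMs have no external IP address, they would need another egress path (for example Cloud NAT) if they must reach the internet.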

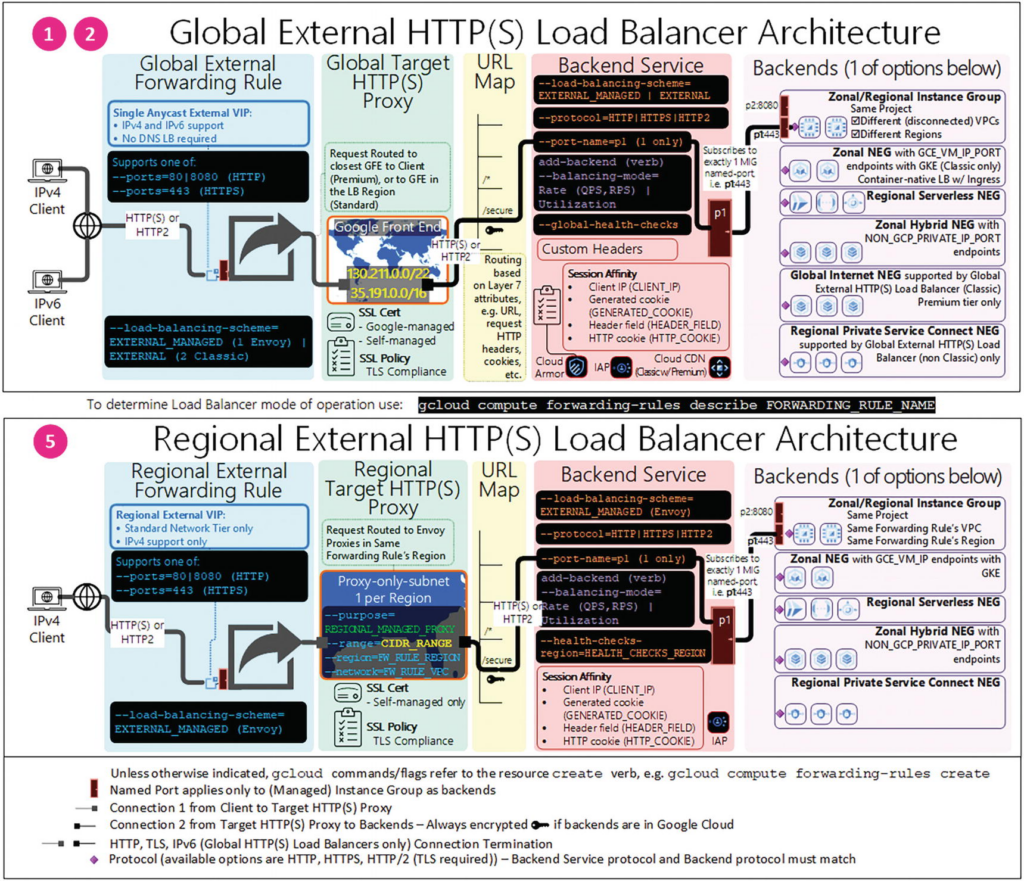

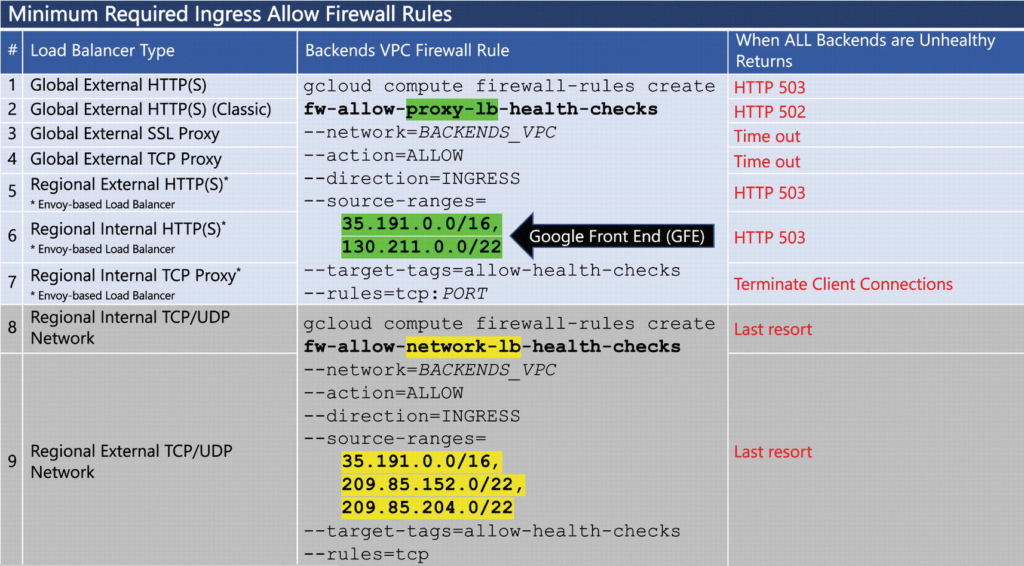

Google Front End (GFE) uses the CIDR blocks 130.211.0.0/22 and 35.191.0.0/16.
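One way to admit traffic from these ranges is an ingress allow firewall rule that targets the tagged backends. The sketch below assumes a VPC named lb-network, a rule named fw-allow-health-check, and backends serving on TCP port 80; all three are illustrative choices, as is the wrapping function.

```shell
# Sketch only: rule name, network name, and port are assumptions.
create_health_check_rule() {
  gcloud compute firewall-rules create fw-allow-health-check \
      --network=lb-network \
      --action=allow \
      --direction=ingress \
      --source-ranges=130.211.0.0/22,35.191.0.0/16 \
      --target-tags=allow-health-checks \
      --rules=tcp:80
}

# Nothing runs until the function is called:
# create_health_check_rule
```

Restricting the rule with --target-tags keeps the two ranges from reaching VMs that are not load balancer backends.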

Forwarding rule: An external forwarding rule specifies an external IP address, port, and target HTTP(S) proxy.

Upon terminating the connection, the target HTTP(S) proxy evaluates the request by using the URL map (and other layer 7 attributes) to make traffic routing decisions.

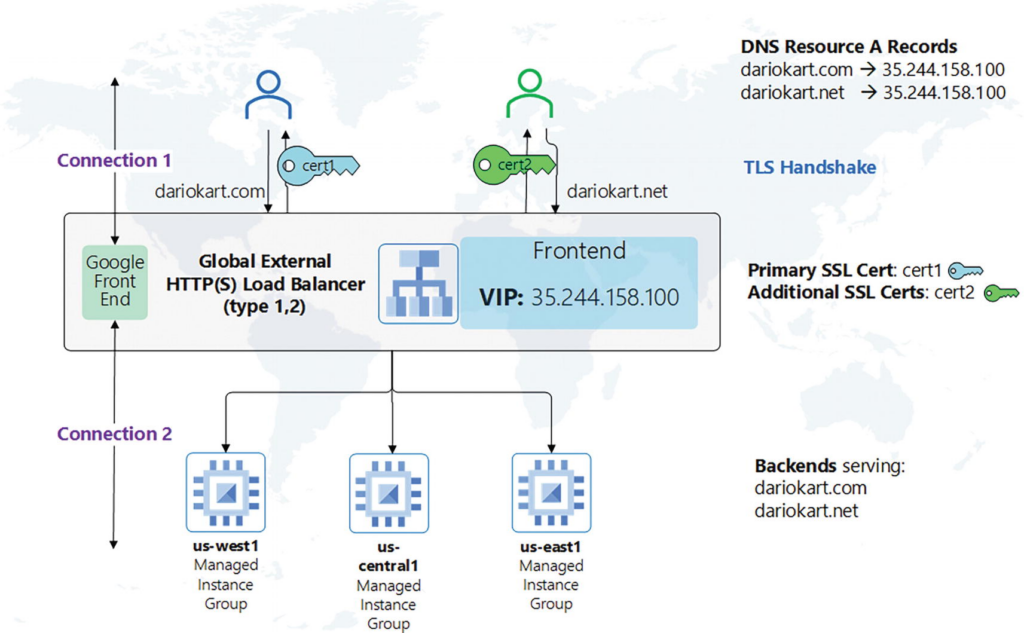

You can configure up to the maximum number of SSL certificates per target HTTP(S) proxy. When you specify more than one SSL certificate, the first certificate in the list of SSL certificates is considered the primary SSL certificate associated with the target proxy.
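To illustrate the certificate ordering, here is a hedged sketch that creates a target HTTPS proxy with two certificates. The proxy, URL map, and certificate names are hypothetical, and the certificates and URL map are assumed to exist already. The first name in the list (cert-primary) would be treated as the primary certificate.

```shell
# Sketch only: https-proxy1, urlmap1, cert-primary, and cert-secondary
# are placeholder names for resources assumed to exist.
create_https_proxy() {
  gcloud compute target-https-proxies create https-proxy1 \
      --url-map=urlmap1 \
      --ssl-certificates=cert-primary,cert-secondary
}

# Nothing runs until the function is called:
# create_https_proxy
```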

The target HTTP(S) proxy uses a URL map to decide where to route the new request. The URL map can also specify additional actions, such as sending redirects to clients.
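As a small illustration of path-based routing, the sketch below adds a path matcher to an existing URL map so that requests under /video/ go to a separate backend service, while everything else falls through to the default. The names urlmap1, backendservice1, video-service, and video-matcher are assumptions made for this example, and both backend services are assumed to exist.

```shell
# Sketch only: all resource names are placeholders.
add_video_path_matcher() {
  gcloud compute url-maps add-path-matcher urlmap1 \
      --path-matcher-name=video-matcher \
      --default-service=backendservice1 \
      --path-rules="/video/*=video-service" \
      --new-hosts="*"
}

# Nothing runs until the function is called:
# add_video_path_matcher
```

The quotes around the path rule and the host pattern keep the shell from expanding the asterisks before gcloud sees them.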

A backend service distributes requests to healthy backends.

Backends are the ultimate destination of your load balancer incoming traffic.

In gcloud, you can add a backend to a backend service using the gcloud compute backend-services add-backend command.

A network load balancer doesn’t have the layer 7 (application layer) advanced routing and session-level capabilities of the HTTP(S) load balancers. Use it when it is acceptable to have SSL traffic decrypted by your backends instead of by the load balancer. When the backends decrypt SSL traffic, there is a greater CPU burden on the backends.

A network endpoint group (NEG) abstracts the backends by letting the load balancer communicate directly with a group of backend endpoints or services.

The following example creates an HTTP load balancing NEG and attaches four zonal network endpoints to the NEG. The zonal network endpoints will act as backends to the load balancer.

1. Create a network:

gcloud compute networks create network-a \
    --subnet-mode custom

2. Create a subnet with alias IP addresses:

gcloud compute networks subnets create subnet-a \
    --network network-a \
    --region us-central1 \
    --range 10.128.0.0/16 \
    --secondary-range container-range=192.168.0.0/16

3. Create two VMs:

gcloud compute instances create vm1 \
    --zone us-central1-c \
    --network-interface \
    "subnet=subnet-a,aliases=container-range:192.168.0.0/24"

gcloud compute instances create vm2 \
    --zone us-central1-c \
    --network-interface \
    "subnet=subnet-a,aliases=container-range:192.168.2.0/24"

4. Create the NEG:

gcloud compute network-endpoint-groups create neg1 \
    --zone=us-central1-c \
    --network=network-a --subnet=subnet-a \
    --default-port=80

5. Update the NEG with endpoints:

gcloud compute network-endpoint-groups update neg1 \
    --zone=us-central1-c \
    --add-endpoint 'instance=vm1,ip=192.168.0.1,port=8080' \
    --add-endpoint 'instance=vm1,ip=192.168.0.2,port=8080' \
    --add-endpoint 'instance=vm2,ip=192.168.2.1,port=8088' \
    --add-endpoint 'instance=vm2,ip=192.168.2.2,port=8080'

6. Create a health check:

gcloud compute health-checks create http healthcheck1 \
    --use-serving-port

The --use-serving-port flag tells the health check to use the port of each endpoint in the network endpoint group.

7. Create the backend service:

gcloud compute backend-services create backendservice1 \
    --global --health-checks healthcheck1

8. Add the NEG neg1 backend to the backend service:

gcloud compute backend-services add-backend backendservice1 \
    --global \
    --network-endpoint-group=neg1 \
    --network-endpoint-group-zone=us-central1-c \
    --balancing-mode=RATE --max-rate-per-endpoint=5

When you add an instance group or a NEG to a backend service, you specify a balancing mode, which defines a method for measuring backend load and a target capacity. External HTTP(S) load balancing supports two balancing modes:

- RATE, for instance groups or NEGs, is the target maximum number of requests (queries) per second (RPS, QPS).

- UTILIZATION is the backend utilization of VMs in an instance group.

9. Create a URL map using the backend service backendservice1:

gcloud compute url-maps create urlmap1 --default-service=backendservice1

10. Create the target HTTP proxy using the URL map urlmap1:

gcloud compute target-http-proxies create httpproxy1 \
    --url-map=urlmap1

11. Create the forwarding rule and attach it to the newly created target HTTP proxy httpproxy1:

gcloud compute forwarding-rules create forwardingrule1 \
    --ip-protocol TCP \
    --ports=80 \
    --global \
    --target-http-proxy httpproxy1
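Once the steps above have completed, a quick check along the following lines can confirm that the pieces are wired together. It reuses the resource names from the example (neg1, backendservice1, forwardingrule1); the function name, the lb_ip variable, and the final curl request are additions assumed for illustration.

```shell
# Sketch only: inspects the resources created in the steps above.
verify_load_balancer() {
  # Endpoints currently registered in the NEG.
  gcloud compute network-endpoint-groups list-network-endpoints neg1 \
      --zone=us-central1-c

  # Health of each endpoint as seen by the backend service.
  gcloud compute backend-services get-health backendservice1 --global

  # External IP address assigned to the forwarding rule.
  lb_ip=$(gcloud compute forwarding-rules describe forwardingrule1 \
      --global --format="value(IPAddress)")

  # Send one request through the load balancer.
  curl --silent --show-error "http://${lb_ip}/"
}

# Nothing runs until the function is called:
# verify_load_balancer
```

Endpoints are likely to report as unhealthy until a process is listening on each endpoint's port and a firewall rule admits the health check probes, so an error from curl at this stage does not necessarily mean the load balancer itself is misconfigured.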

Figure 5-4 Load balancer health check firewall rules

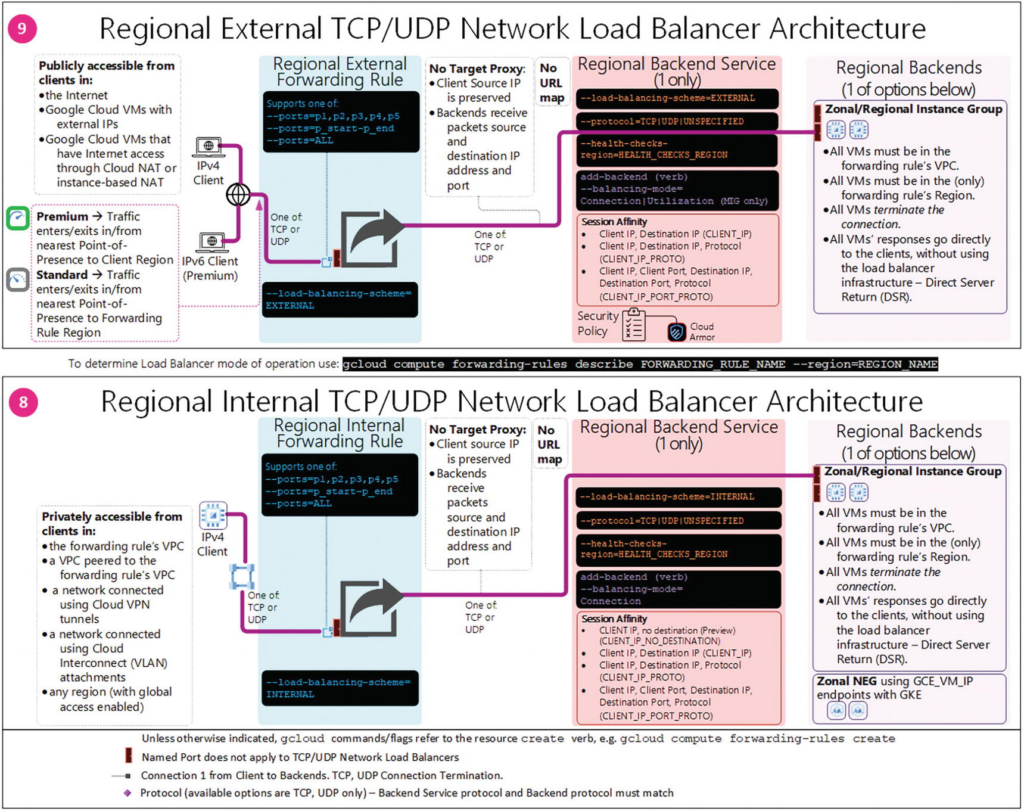

The two TCP/UDP network load balancers (denoted by 8 and 9 in Figure 5-4) are the only nonproxy-based load balancers. Put differently, these load balancers preserve the original client IP address because the connection is not terminated until all packets reach their destination in one of the backends. Due to this behavior, the source ranges for their compatible health checks are different from the ones applicable to proxy-based load balancers (1–7 in Figure 5-4).

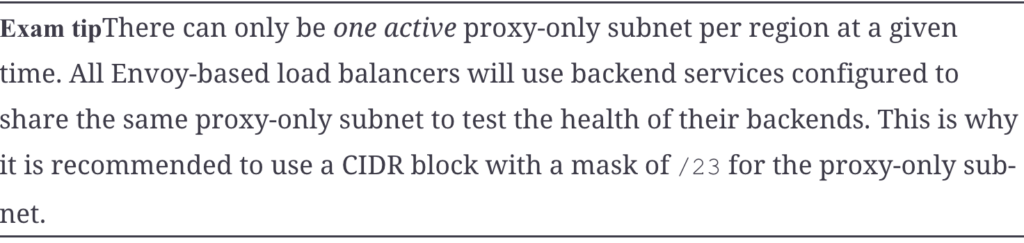

Also, regional load balancers that use the open source Envoy proxy (load balancers 5, 6, 7 in Figure 5-4) require an additional ingress allow firewall rule that accepts traffic from a specific subnet referred to as the proxy-only subnet.

These load balancers terminate incoming connections at the load balancer frontend. Traffic is then sent to the backends from IP addresses located on the proxy-only subnet.

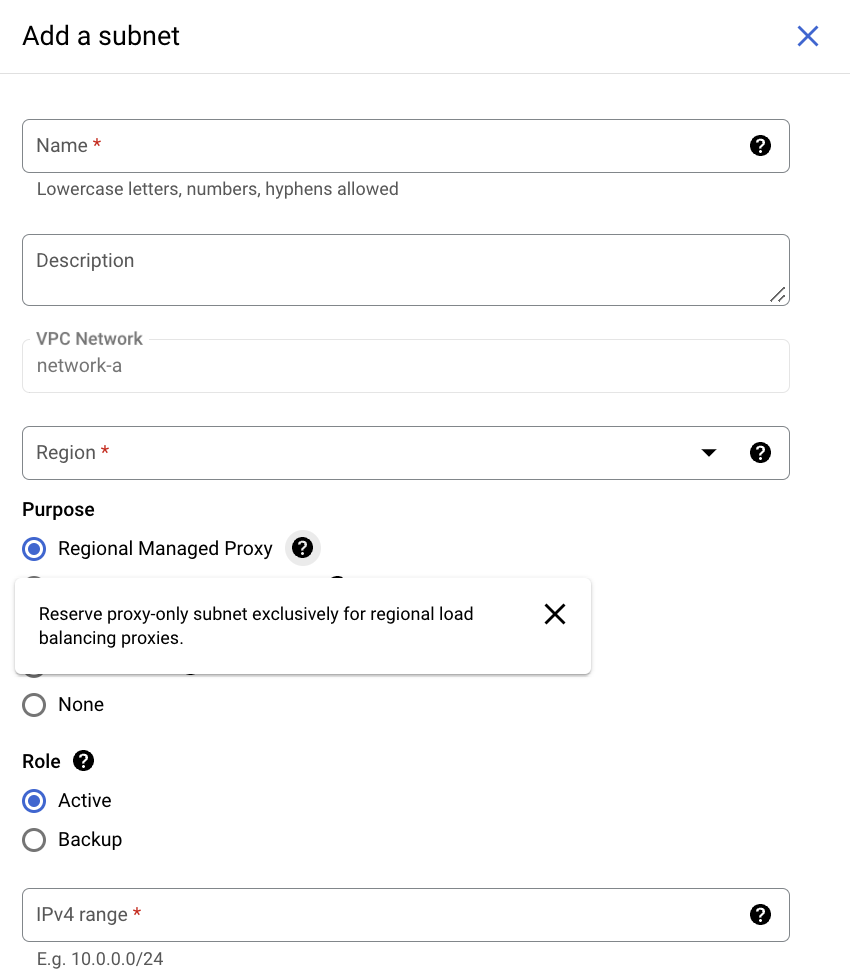

As a result, in the region where your Envoy-based load balancer operates, you must first create the proxy-only subnet.

A proxy-only subnet must provide 64 or more IP addresses. This corresponds to a prefix length of /26 or shorter. We recommend that you start with a proxy-only subnet with a /23 prefix (512 proxy-only addresses) and change the size as your traffic needs change.
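The sizing arithmetic can be checked with a couple of small shell helpers. The function names subnet_size and is_valid_proxy_only_prefix are hypothetical and not part of any Google Cloud tooling; the calculation is simply 2 raised to the power of (32 minus the prefix length).

```shell
# Number of IPv4 addresses covered by a given prefix length.
subnet_size() {
  echo $(( 1 << (32 - $1) ))
}

# A proxy-only subnet needs at least 64 addresses,
# which means a prefix length of 26 or less.
is_valid_proxy_only_prefix() {
  [ "$1" -le 26 ]
}

subnet_size 26   # prints 64
subnet_size 23   # prints 512
is_valid_proxy_only_prefix 28 || echo "/28 is too small for a proxy-only subnet"
```

A /28, for instance, covers only 16 addresses and would fall short of the 64-address minimum.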

gcloud compute networks subnets create proxy-only-subnet \
    --purpose=REGIONAL_MANAGED_PROXY \
    --role=ACTIVE \
    --region=us-central1 \
    --network=lb-network \
    --range=10.129.0.0/23

Upon creation of the proxy-only subnet, you must add another firewall rule to the load balancer VPC (lb-network in our preceding example). This firewall rule allows traffic from the proxy-only subnet to reach the subnet where the backends live. The two subnets are different, even though they both reside in the load balancer VPC. This means adding one rule that allows traffic on TCP ports 80, 443, and 8080 from the range of the proxy-only subnet, that is, 10.129.0.0/23.

gcloud compute firewall-rules create fw-allow-proxies \
    --network=lb-network \
    --action=allow \
    --direction=ingress \
    --source-ranges=10.129.0.0/23 \
    --target-tags=allow-health-checks \
    --rules=tcp:80,tcp:443,tcp:8080

In the preceding example, the firewall rule targets all VMs that are associated with the network tag allow-health-checks.