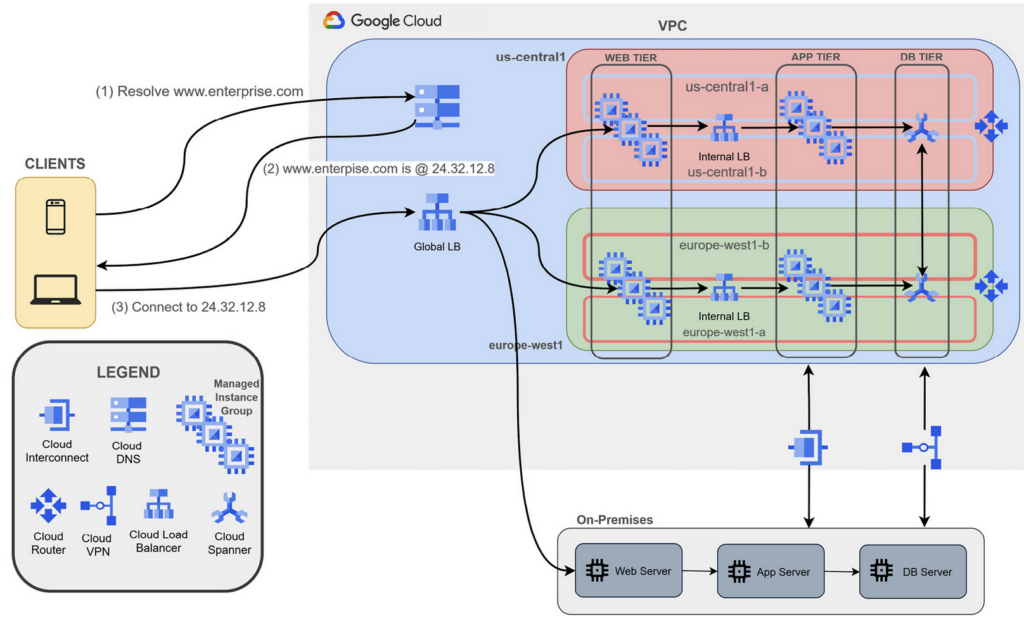

Hot DR scenario for three-tier application in hybrid cloud:

The database tier should be designed for reliability as well. In GCP, this can be achieved with Cloud Spanner, a managed relational database service that can be deployed in regional or multi-region configurations. To keep the databases synchronized, the application tier must be modified to write consistently to both databases. Dedicated Interconnect and Cloud VPN should work in active/backup mode. In scenarios where there are workloads in multiple GCP regions, the cloud architect should include one Cloud Router per region in order to reduce latency.

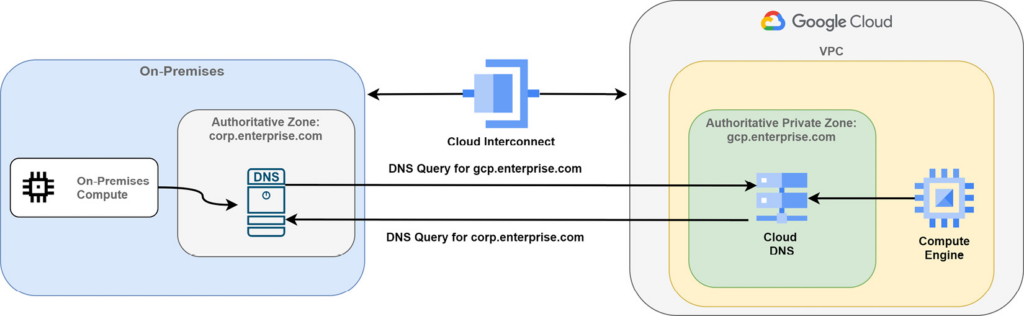

It is not always possible to completely migrate the DNS to the cloud, and DNS hybrid solutions must still be in place, especially for private scenarios.

Name pattern:

- Complete domain separation: This pattern uses a separate domain for your on-premises and cloud environment, such as corp.enterprise.com and gcp.enterprise.com. This pattern is the best one to choose because it makes it extremely easy to forward DNS requests between environments.

- Google Cloud domain as subdomain: This pattern uses a subdomain for all GCP resources, and they are subordinate to the on-premises domain. For instance, you could use corp.enterprise.com for on-premises resources and gcp.corp.enterprise.com for Google Cloud resources. This pattern applies when the resources on-premises are far greater than ones in GCP.

- On-prem domain as subdomain: This pattern uses a subdomain for all on-premises resources, and they are subordinate to the Google Cloud domain. For example, you could use corp.enterprise.com for Google Cloud resources and dc.corp.enterprise.com for on-premises resources. This pattern is not common, and it applies to companies that have a small number of services on-premises.

For hybrid environments, the recommendation for private DNS resolution is to use two separate authoritative DNS systems.

With a hybrid architecture based on two authoritative DNS systems, you are required to configure forwarding zones and server policies.

Important Note: To forward DNS requests between different Google VPCs, you need to use DNS peering regardless of the type of interconnection.

Forwarding zones can be used to query DNS records in your on-premises environment for your corporate resources. Thus, if you need to query resources in corp.enterprise.com, you need to set up a forwarding zone. This approach is preferred because it preserves access to GCE instances and public IP addresses are still resolved without passing through the on-premises DNS server.

Important Note: Cloud DNS uses the IP address range of 35.199.192.0/19 to send queries to your on-premises environment. Make sure that your inbound firewall policies of your DNS server accept this source range.
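As a quick illustration of the note above, the following minimal sketch checks whether a query source address falls inside the Cloud DNS forwarding range, which is the test an on-premises firewall rule would effectively apply. The function name `is_cloud_dns_forwarder` is hypothetical; only the CIDR range comes from the text.

```python
import ipaddress

# Source range Cloud DNS uses when forwarding queries to on-premises servers
# (taken from the note above).
CLOUD_DNS_FORWARDING_RANGE = ipaddress.ip_network("35.199.192.0/19")


def is_cloud_dns_forwarder(source_ip: str) -> bool:
    """Return True if source_ip belongs to the Cloud DNS forwarding range.

    Hypothetical helper: it only models the source-range check that an
    inbound firewall policy on the on-premises DNS server would perform.
    """
    return ipaddress.ip_address(source_ip) in CLOUD_DNS_FORWARDING_RANGE


if __name__ == "__main__":
    for ip in ("35.199.192.10", "35.199.224.1"):
        print(ip, is_cloud_dns_forwarder(ip))
```

A /19 starting at 35.199.192.0 spans 35.199.192.0 through 35.199.223.255, so the second address in the usage example falls just outside the range.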

DNS server policies allow on-premises hosts to query DNS records in a GCP private DNS environment. For an on-premises host to resolve a GCE instance name in gcp.enterprise.com, you need to enable inbound DNS forwarding in a DNS server policy.
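The split between the two authoritative systems can be sketched as a suffix match on the queried name. This is a simplified model, not Cloud DNS itself: the function `pick_resolver`, the labels `on-prem-dns` and `cloud-dns`, and the zone table are all hypothetical, and the domain names reuse the examples from the complete domain separation pattern.

```python
# Hypothetical forwarding-zone table: DNS suffix -> system that answers for it.
FORWARDING_ZONES = {
    "corp.enterprise.com": "on-prem-dns",
}


def pick_resolver(name: str, forwarding_zones: dict, default: str = "cloud-dns") -> str:
    """Return which DNS system should answer a query for `name`.

    The longest matching forwarding-zone suffix wins; names that match no
    forwarding zone stay with the default (cloud-side) resolver.
    """
    query = name.rstrip(".").lower()
    best_zone, best_target = "", default
    for zone, target in forwarding_zones.items():
        suffix = zone.rstrip(".").lower()
        matches = query == suffix or query.endswith("." + suffix)
        if matches and len(suffix) > len(best_zone):
            best_zone, best_target = suffix, target
    return best_target


if __name__ == "__main__":
    print(pick_resolver("db1.corp.enterprise.com", FORWARDING_ZONES))
    print(pick_resolver("vm1.gcp.enterprise.com", FORWARDING_ZONES))
```

Matching on a leading dot keeps a name such as `notcorp.enterprise.com` from being mistaken for a member of `corp.enterprise.com`.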

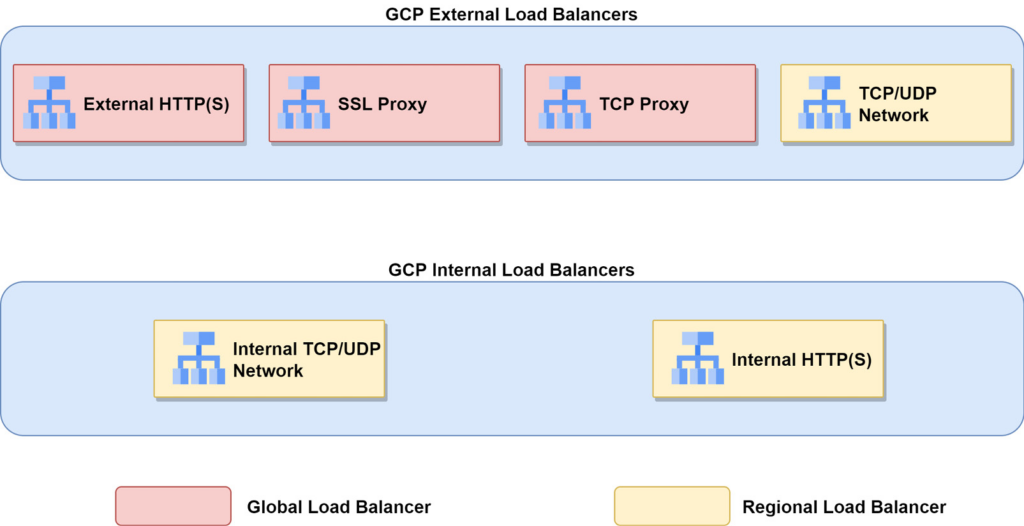

Global load balancers can be used when backends are distributed across multiple regions. Regional load balancers can be used when backends are in one region.

Improve user experience:

- Deploy backends in each GCP region where your users are. Route user requests to the nearest backends using a GCP global load balancer depending on your application. An HTTP(S) or SSL/TCP Proxy load balancer can do this automatically.

- If you are deploying global services, HTTP(S) or SSL/TCP Proxy load balancers should be used to minimize both latency and time to first byte (TTFB). Because these load-balancer services proxy the user traffic, this helps to reduce the effects of TCP slow start, reduces Transport Layer Security (TLS) handshakes for every SSL offloading session, and automatically upgrades user sessions to HTTP/2, reducing the number of packets transmitted.

- If your application uses multiple tiers (that is, web frontend, application, and database), they should be deployed in the same GCP region. Avoid inter-regional traffic between application tiers. Google Cloud Trace can help you map the interactions between application components and find where latency can be reduced.

- Integrate GCP Cloud CDN any time the user traffic is cacheable. This greatly reduces user latency because the content is served directly at the Google frontend.

- For static content, you should use Google Cloud Storage in combination with HTTP(S) load balancing and Cloud CDN to serve the content directly. This brings two benefits: it reduces the workload to the web server and reduces latency.

The best practices recommended by the Google Cloud security team can be listed as follows:

- Manage traffic with firewall rules: All the traffic to and from cloud assets should be controlled using firewall rules. GCE instances and GKE clusters should receive only the traffic they need to serve. Google recommends applying firewall rules to groups of VMs with service accounts or tags. This helps in better administering which traffic should be received by groups of VMs. For example, you can create a firewall rule that allows HTTPS traffic to all GCE instances that stay in a MIG by using a dedicated tag such as webserver.

- Limit external access: Avoid exposing your GCE instances to the internet as much as possible. If they need to connect to public services, for example to download operating system updates, you should deploy Cloud NAT to let all the VMs in one region reach external services. In cases where they only have to connect to GCP application programming interfaces (APIs) and services such as Google Cloud Storage, you can enable Private Google Access on the subnet and keep private IP addresses on the VMs.

- Centralize network control: In large GCP organizations, where resources in different projects need to communicate with each other privately, it is recommended to use one Shared VPC under a single network administration. Network administrators should be granted an Identity and Access Management (IAM) role with the minimum set of permissions that allows them to configure subnets, routes, firewall rules, and so on for all the projects in the organization.

- Interconnect privately to your on-premises network: Based on your network bandwidth, latency, and service-level agreement (SLA) requirements, you should consider either Cloud Interconnect options (Dedicated or Partner) or Cloud VPN in order to avoid traversing the internet to connect your on-premises network. You should choose Cloud Interconnect when you require low-latency, highly scalable, and reliable connectivity for transferring sensitive data. On the other hand, you can use Cloud VPN to transfer data using IP Security (IPsec).

- Secure your application and data: Remember that securing your application and data in the cloud is a shared responsibility between Google Cloud and you. However, Google Cloud offers additional tools to help secure your application and data. Indeed, you can adopt VPC Service Controls to define a security perimeter to constrain data in a VPC and avoid data exfiltration. Additionally, you can add Google Cloud Armor to your HTTP(S) load balancer to defend your application from distributed denial-of-service (DDoS) attacks and untrusted IP addresses. Moreover, you can control who can access your application with Google Cloud Identity-Aware Proxy (IAP).

- Control access to resources: Prevent unwanted access to GCP resources by assigning IAM roles with the least privileged approach. Only the necessary access should be granted to your resources running in Google Cloud projects. The recommendation is to assign IAM roles to groups of accounts that share the same responsibilities instead of assigning them to individuals’ accounts. In cases where we have services that need access to other GCP services (a GCE instance connects to Google Cloud Storage), it is recommended to assign IAM roles to a service account.
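The tag-based firewall practice from the first item in this list can be sketched with a small default-deny model. This is an illustration only and does not call any GCP API: the classes `IngressAllowRule` and `Instance`, the function `is_allowed`, and the instance names are hypothetical, while the `webserver` tag and the HTTPS example come from the text.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class IngressAllowRule:
    """Hypothetical allow rule that targets instances by network tag."""
    target_tag: str
    protocol: str
    port: int


@dataclass
class Instance:
    """Hypothetical VM with a set of network tags."""
    name: str
    tags: set = field(default_factory=set)


def is_allowed(instance: Instance, protocol: str, port: int, rules: list) -> bool:
    """Default-deny ingress: traffic passes only if an allow rule targets
    one of the instance's tags and matches the protocol and port."""
    return any(
        rule.target_tag in instance.tags
        and rule.protocol == protocol
        and rule.port == port
        for rule in rules
    )


# Allow HTTPS only to instances tagged "webserver" (for example, a MIG).
RULES = [IngressAllowRule(target_tag="webserver", protocol="tcp", port=443)]

if __name__ == "__main__":
    web = Instance("web-1", {"webserver"})
    db = Instance("db-1", {"database"})
    print(is_allowed(web, "tcp", 443, RULES), is_allowed(db, "tcp", 443, RULES))
```

Because the rule is attached to the tag rather than to individual VMs, any new instance created with the `webserver` tag receives the same treatment without further rule changes.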

In Custom mode, you must select a primary IP address range. The addresses of VM instances, internal load balancers, and internal protocol forwarding come from this range. You can also select a secondary IP address range for your subnet. This secondary range can be used by your VMs to expose different services as well as by Pods in GKE.

Your subnet can have primary and secondary CIDR ranges. This is useful if you want to maintain network separation between the VMs and the services running on them. One common use case is GKE. Indeed, worker Nodes get the IP address from the primary CIDR, while Pods have a separate CIDR range in the same subnet. This scenario is referred to as alias IPs in Google Cloud. Indeed, with alias IPs, you can configure multiple internal IP addresses in different CIDR ranges without having to define a separate network interface on the VM.
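The relationship between primary and secondary ranges can be sketched with Python's `ipaddress` module. The CIDR values, the helper names `validate_ranges` and `pod_alias_range`, and the choice of one /24 alias block per node are assumptions made for illustration; they are not taken from the text.

```python
import ipaddress
from itertools import islice


def validate_ranges(primary, secondaries):
    """Raise ValueError if any two ranges of the subnet overlap."""
    networks = [primary, *secondaries]
    for i, first in enumerate(networks):
        for second in networks[i + 1:]:
            if first.overlaps(second):
                raise ValueError(f"{first} overlaps {second}")


def pod_alias_range(pod_range, node_index, per_node_prefix=24):
    """Return the alias block for the node at node_index.

    Assumption: each node receives one block of size per_node_prefix carved
    sequentially out of the secondary (Pod) range.
    """
    blocks = pod_range.subnets(new_prefix=per_node_prefix)
    try:
        return next(islice(blocks, node_index, None))
    except StopIteration:
        raise ValueError("secondary range exhausted") from None


if __name__ == "__main__":
    primary = ipaddress.ip_network("10.0.0.0/24")  # hypothetical node range
    pods = ipaddress.ip_network("10.4.0.0/14")     # hypothetical Pod range
    validate_ranges(primary, [pods])
    print(pod_alias_range(pods, 0), pod_alias_range(pods, 1))
```

The node keeps a single network interface with an address from the primary range, while the alias block from the secondary range is what its Pods draw from.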

BGP is the routing protocol used on the internet today to make every network aware of the path to follow to reach any other network in the world. It is considered an exterior gateway protocol (EGP) because it is used mainly to exchange routing information among different autonomous systems (ASes).

Interior gateway protocols (IGPs), by contrast, are used only inside an AS to provide full IP reachability for all its prefixes. Some examples of IGPs are Open Shortest Path First (OSPF), Intermediate System to Intermediate System (IS-IS), and Enhanced Interior Gateway Routing Protocol (EIGRP). In GCP, you deal with BGP only.

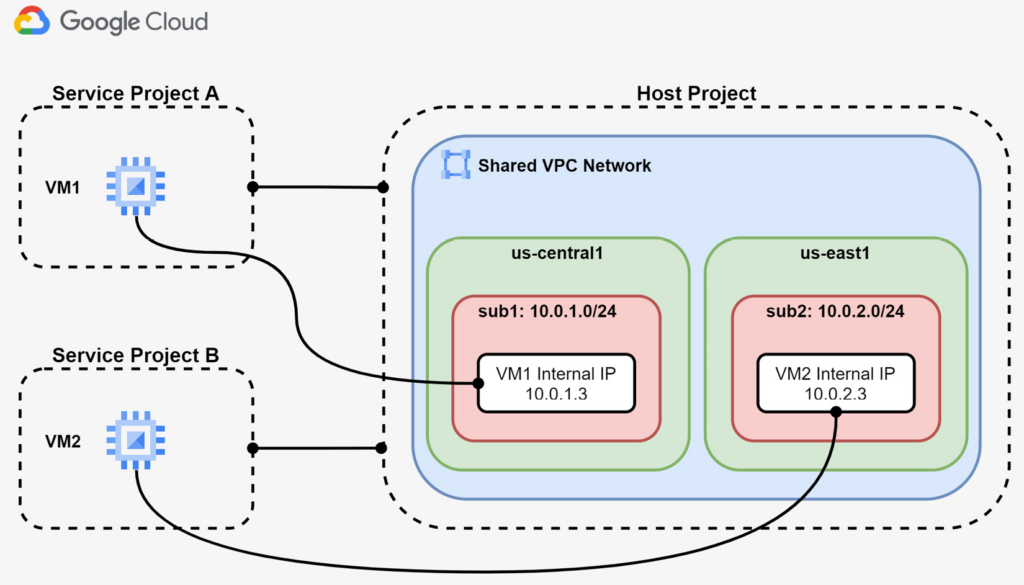

Shared VPC has the following components in its architecture:

- Host project: This is a project that contains one or more Shared VPC networks. It is created by the Shared VPC Admin and can have one or more service projects attached to it.

- Service project: This is any project that is attached to a host project. This can be done by the Shared VPC Admin. The service project belongs to only one host project.

The following diagram shows a basic example of a Shared VPC scenario:

As you can see from above, the host project has the VPC network that contains one subnet for each GCP region. Additionally, the host project has two service projects attached. You will note that service projects do not have any VPC network. However, they can deploy GCE instances that get private IP addresses from the region in which they run.

The communication between resources in service projects depends on the sharing policy adopted in the Shared VPC and on the firewall rules applied.
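The host and service project relationship described above can be modeled in a few lines. This is a toy data structure, not the Shared VPC API: the class `SharedVpcRegistry`, its methods, and the project names are hypothetical. It only encodes the rule that a service project belongs to exactly one host project.

```python
class SharedVpcRegistry:
    """Toy model of host/service project attachments in Shared VPC."""

    def __init__(self):
        # service project -> host project it is attached to
        self._host_of = {}

    def attach(self, service_project: str, host_project: str) -> None:
        """Attach a service project, refusing a second, different host."""
        current = self._host_of.get(service_project)
        if current is not None and current != host_project:
            raise ValueError(
                f"{service_project} is already attached to {current}"
            )
        self._host_of[service_project] = host_project

    def service_projects(self, host_project: str) -> list:
        """Return the service projects attached to a host project."""
        return sorted(
            svc for svc, host in self._host_of.items() if host == host_project
        )


if __name__ == "__main__":
    registry = SharedVpcRegistry()
    registry.attach("svc-frontend", "host-net")
    registry.attach("svc-backend", "host-net")
    print(registry.service_projects("host-net"))
```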

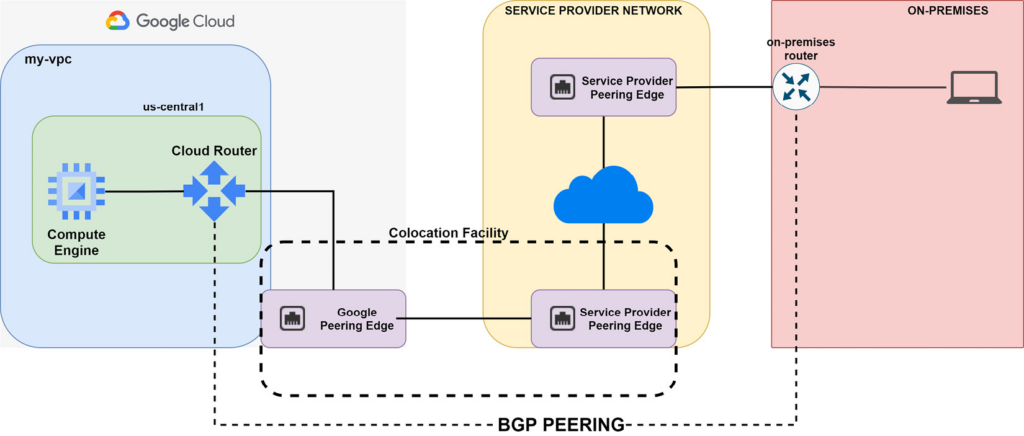

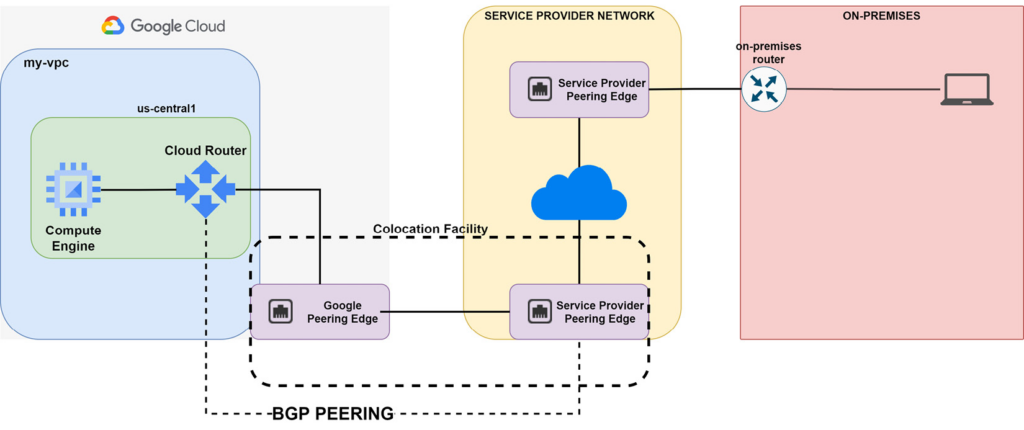

When your Cloud Interconnect design requires a service provider (SP) to reach the Google Cloud network, you are configuring a Partner Interconnect solution. It comes with two connectivity options, as outlined here:

- Layer 2 connectivity: This emulates an Ethernet circuit across the SP network and thus allows your on-premises router to be virtually interconnected with the Google peering edge. With this solution, you are required to configure BGP peering between your on-premises router and Cloud Router in your VPC.

- Layer 3 connectivity: This provides IP connectivity between your on-premises router and Cloud Router in your VPC. With this option, you do not configure BGP peering with Cloud Router. Instead, the GCP partner SP establishes BGP peering with Cloud Router and extends the IP connectivity to your on-premises router. Since Cloud Router only supports BGP dynamic routing, you cannot use a static route in VPC pointing to the interconnect. Of course, the customer can configure static or dynamic routing in their on-premises router.

Layer 2 Partner Interconnect:

Layer 3 Partner Interconnect:

You can establish BGP peering with Google Cloud with two peering options, as follows:

- Direct Peering: This is a co-located option that requires you to have your physical device attached to a Google edge device available at a private co-location facility.

- Carrier Peering: This option allows you to establish BGP peering with Google even though you are not co-located in one of the Google PoPs. You can achieve this through a supported SP that provides IP connectivity to the Google network.

Cloud Router is a regional service, and therefore when created, it is deployed in one region in your VPC. Your VPC can use one of two dynamic routing modes, which influence Cloud Router advertising behavior, outlined as follows:

- Regional dynamic routing: Cloud Router knows only the subnets attached to the region in which it is deployed. In this mode, by default Cloud Router advertises only the subnets that reside within its region. The routes learned by Cloud Router only apply to the subnets in the same region as the Cloud Router.

- Global dynamic routing: Cloud Router knows all the subnets that belong to the VPC in which it is deployed. Therefore, by default Cloud Router advertises all the subnets of the VPC. Routes learned by the Cloud Router apply to all subnets in all regions in the VPC.

In both cases, Cloud Router advertises all the routes it knows. This is the default behavior when BGP is used as a routing protocol. If you need to control which routes are advertised to the Cloud Router peers, you need to configure custom route advertisements, which act as filters on the prefixes advertised via BGP. More specifically, custom route advertisements are a route policy that can be applied either to all the BGP sessions of a Cloud Router or to an individual BGP peer.
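The default advertisement behavior of the two dynamic routing modes can be sketched as a simple filter over the VPC subnets. The function name `default_advertised_subnets`, the subnet table, and the region and CIDR values are hypothetical; the sketch models only the default behavior and ignores custom route advertisements.

```python
def default_advertised_subnets(router_region: str, subnets: dict, mode: str) -> list:
    """Return the subnet prefixes a Cloud Router advertises by default.

    subnets maps each subnet CIDR to its region. In "regional" mode only the
    subnets in the router's own region are advertised; in "global" mode every
    subnet of the VPC is advertised.
    """
    if mode == "global":
        return sorted(subnets)
    if mode == "regional":
        return sorted(
            cidr for cidr, region in subnets.items() if region == router_region
        )
    raise ValueError(f"unknown dynamic routing mode: {mode!r}")


# Hypothetical VPC with one subnet in each of two regions.
VPC_SUBNETS = {
    "10.128.0.0/20": "us-central1",
    "10.132.0.0/20": "europe-west1",
}

if __name__ == "__main__":
    print(default_advertised_subnets("us-central1", VPC_SUBNETS, "regional"))
    print(default_advertised_subnets("us-central1", VPC_SUBNETS, "global"))
```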

When your design includes global dynamic routing (that is, BGP) and multiple Cloud Router services in various regions, you must decide how to handle inbound and outbound routing within your VPC. In Google Cloud, this can be achieved using two methods, as outlined next:

Cloud Router does not support other BGP attributes apart from AS_PATH and MED. For example, you cannot configure Local Preference or Community. AS_PATH only works with one Cloud Router that has multiple BGP sessions—for example, AS_PATH will not be used across two Cloud Router instances in a scenario where we have two Dedicated or Partner Interconnect connections where their VLAN attachments are attached to separate Cloud Router instances.

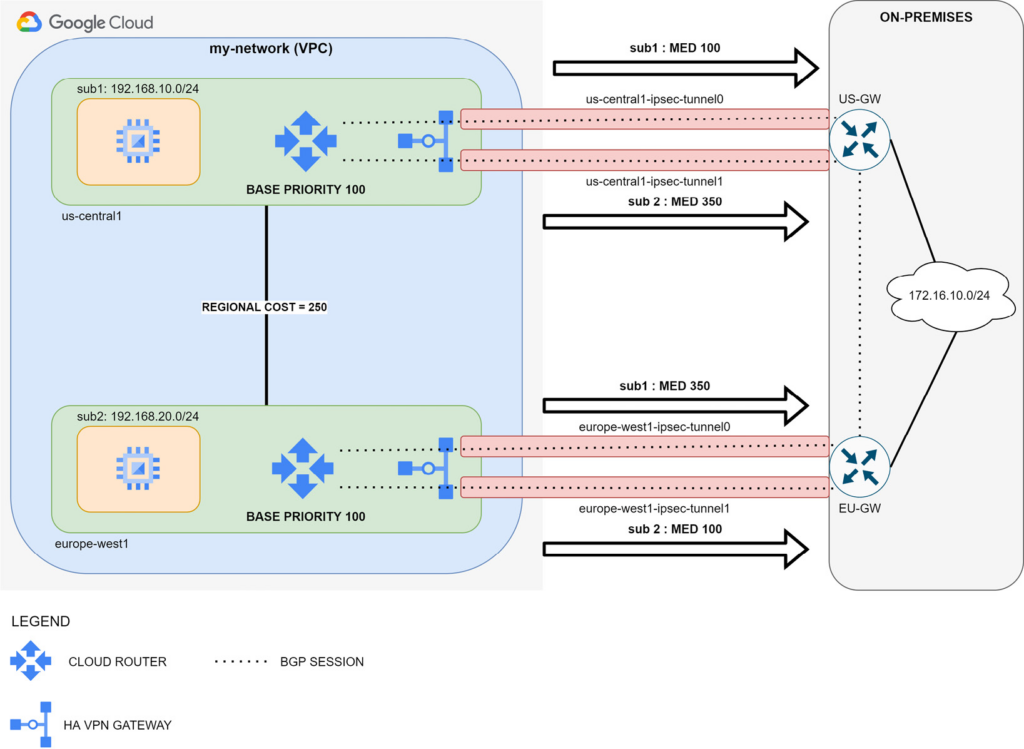

Each Cloud Router instance advertises VPC subnets with different MED values in order to communicate how traffic from the on-premises network should enter the VPC. The values differ because, when Cloud Router advertises subnet prefixes from other regions, it takes the base priority and adds the regional cost to obtain the MED value.

In the example shown in the preceding diagram, traffic from the 172.16.10.0/24 network will use US-GW to reach sub1 because US-GW's best BGP route is the one with the lowest MED (that is, 100). Conversely, traffic destined for sub2 will use EU-GW for the same reason.

Regional Cost is a system-defined parameter set by Google Cloud, and it is out of your control. It depends on factors such as the latency and the distance between two regions, and it ranges from 201 to 9999.

Base Priority is a parameter that you choose to assign a priority to your routes. Keep it in the range 0 to 200 to guarantee that it is always lower than any regional cost. This avoids unexpected routing within your VPC.
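The MED arithmetic described above can be written out as a short worked example. The function `advertised_med` is hypothetical, and the regional cost of 250 used in the usage lines is an invented value, since real regional costs are set by Google Cloud. Rejecting base priorities above 200 is a choice made in this sketch to enforce the recommendation, not a platform limit stated in the text.

```python
REGIONAL_COST_MIN = 201
REGIONAL_COST_MAX = 9999
RECOMMENDED_BASE_PRIORITY_MAX = 200


def advertised_med(base_priority: int, regional_cost: int = 0) -> int:
    """Return the MED for an advertised subnet prefix.

    regional_cost is 0 for a subnet in the Cloud Router's own region;
    otherwise it is the system-defined cost between the two regions.
    """
    if not 0 <= base_priority <= RECOMMENDED_BASE_PRIORITY_MAX:
        raise ValueError("base priority outside the recommended 0-200 range")
    if regional_cost != 0 and not (
        REGIONAL_COST_MIN <= regional_cost <= REGIONAL_COST_MAX
    ):
        raise ValueError("regional cost must be 0 or between 201 and 9999")
    return base_priority + regional_cost


if __name__ == "__main__":
    print(advertised_med(100))        # subnet in the router's own region
    print(advertised_med(100, 250))   # remote subnet, hypothetical cost 250
```

Keeping the base priority at 200 or below means that even the highest local MED (200) is lower than the lowest possible remote MED (0 + 201), so a local path is always preferred over a cross-region one.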

In order to maintain symmetric routing in your design, you must advertise your on-premises subnets using MED. Google Cloud Router accepts AS_PATH PREPEND when there are multiple BGP sessions terminated on the same Cloud Router. When you have different Cloud Routers, only MED is used in evaluating routing decisions. The recommendation is to maintain the same approach you choose with Cloud Router to have a simpler design.

Designing failover and DR with Cloud Router and Dedicated Interconnect

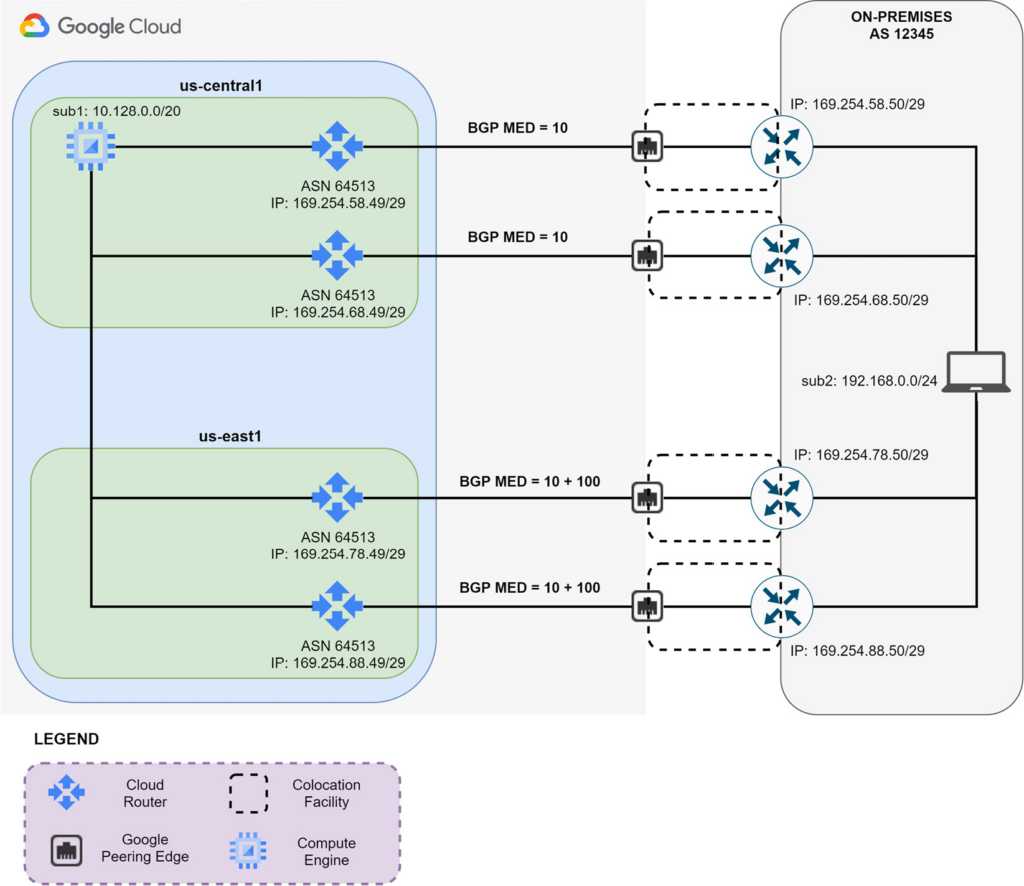

When you are designing hybrid Cloud interconnects for production and mission-critical applications that have a low tolerance to downtime, you should build an HA architecture that includes the following:

- At least four Dedicated Interconnect connections, two in each metropolitan area. In each metro area, the two connections should be in separate Google edge availability domains and connect to the VPC via separate VLAN attachments.

- At least four Cloud Routers, two in each GCP region. Do this even if your GCE instances run in a single region.

- Global dynamic routing mode must be set within the VPC.

- Use two gateways in your on-premises network per each GCP region you are connecting with.

As shown above, Cloud Routers exchange subnet prefixes with the on-premises routers using BGP. In the DR design, you must use four BGP sessions, one for each VLAN attachment that you have in your co-location facility. Using BGP, you can exchange prefixes with different metrics (that is, MED). In this way, you can design active/backup connectivity between sub1 (10.128.0.0/20) in your VPC and sub2 (192.168.0.0/24) in your on-premises network.

Setting a base priority of 100 for sub1 in all your Cloud Routers makes the us-central1 Cloud Routers advertise two routes with lower MED values. The us-east1 Cloud Routers, by contrast, advertise two routes with higher MED values because they add the regional cost between us-central1 and us-east1 to the base priority. As a result, the on-premises routers have two active routes toward the us-central1 Cloud Routers and two backup routes toward the us-east1 Cloud Routers. If both Dedicated Interconnect links fail in the us-central1 region, BGP reroutes the traffic by activating the path via us-east1. In this manner, you can design an interconnection with 99.99% availability between your VPC and your on-premises network.

In order to maintain symmetric traffic between your VPC network and your on-premises network, you must advertise different BGP MED values from your on-premises routers. This will ensure that the incoming traffic to sub2 (192.168.0.0/24) will use the appropriate Dedicated Interconnect links.
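The active/backup behavior of this design can be sketched as a lowest-MED selection over the four advertised routes. This is a deliberately simplified model of BGP best-path selection that compares MED only and ignores every other attribute. The router names, the `Route` class, the function `active_routes`, and the MED of 350 (base priority 100 plus an invented regional cost of 250) are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    """One BGP route for a prefix as learned by the on-premises routers."""
    prefix: str
    next_hop: str
    med: int


def active_routes(routes, prefix, down=frozenset()):
    """Return the usable routes for prefix that share the lowest MED.

    Routes whose next hop is listed in `down` (failed links or routers)
    are withdrawn before the comparison.
    """
    candidates = [
        r for r in routes if r.prefix == prefix and r.next_hop not in down
    ]
    if not candidates:
        return []
    best = min(r.med for r in candidates)
    return [r for r in candidates if r.med == best]


SUB1 = "10.128.0.0/20"
ROUTES = [
    Route(SUB1, "us-central1-cr-a", 100),
    Route(SUB1, "us-central1-cr-b", 100),
    Route(SUB1, "us-east1-cr-a", 350),  # 100 + hypothetical regional cost
    Route(SUB1, "us-east1-cr-b", 350),
]

if __name__ == "__main__":
    print([r.next_hop for r in active_routes(ROUTES, SUB1)])
    failed = {"us-central1-cr-a", "us-central1-cr-b"}
    print([r.next_hop for r in active_routes(ROUTES, SUB1, down=failed)])
```

With all links up, only the two us-central1 routes are active; once both are withdrawn, the two us-east1 routes take over, which mirrors the failover described above.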