In Google Cloud, a subnet is a regional resource that has a defined range of IP addresses associated with it.

Two VMs in the same zone and on the same network communicate for free, but traffic between machines in different zones, even zones in the same region, incurs a network egress fee. Traffic between machines in different regions is also charged, at a rate that depends on the source and destination locations. Data downloaded out of the cloud to external systems costs money too, no matter which resource it comes from. Don’t design a network without thinking through the cost ramifications of that design.
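The charging rules above can be sketched as a small classifier. This is only an illustration: `TrafficClass`, `region_of`, and `classify` are hypothetical names, no actual prices are modeled, and the code assumes both VMs sit on the same network and that a zone name is its region plus a trailing letter (for example `us-central1-a`).

```python
from enum import Enum
from typing import Optional


class TrafficClass(Enum):
    SAME_ZONE = "same zone: no egress charge"
    INTER_ZONE = "different zones, same region: egress charged"
    INTER_REGION = "different regions: egress charged by source and destination"
    EXTERNAL = "leaves Google Cloud: egress charged"


def region_of(zone: str) -> str:
    """Derive the region from a zone name, e.g. 'us-central1-a' -> 'us-central1'."""
    return zone.rsplit("-", 1)[0]


def classify(src_zone: str, dst_zone: Optional[str]) -> TrafficClass:
    """Classify traffic from a VM; dst_zone=None means the destination is external."""
    if dst_zone is None:
        return TrafficClass.EXTERNAL
    if src_zone == dst_zone:
        return TrafficClass.SAME_ZONE
    if region_of(src_zone) == region_of(dst_zone):
        return TrafficClass.INTER_ZONE
    return TrafficClass.INTER_REGION


if __name__ == "__main__":
    print(classify("us-central1-a", "us-central1-b").value)
```

Running a planned traffic matrix through a check like this before deployment is one way to spot which flows would cross a zone or region boundary.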

If two Compute Engine machines aren’t on the same network, and there’s no special connection between the two networks (peering, VPN, multi-NIC, and so on), then the only way they can communicate is through publicly routable external IPs. The packets leave the first machine’s VPC, head out into Google’s edge network, pass through the edge network’s security, come back down into the second VPC, and finally reach the destination VM. That trip into and out of Google’s edge network adds overhead, latency, and cost.

Private Google Access is a subnet-level feature that allows resources with no public IP to access Google Cloud resources without sending traffic through Google’s edge network. If you have a VPN or Interconnect as part of a hybrid cloud configuration, then private access can be extended to on-premises workloads, but you will need to configure on-premises DNS to resolve private.googleapis.com to 199.36.153.8/30 (or restricted.googleapis.com to 199.36.153.4/30) and route those ranges over your hybrid cloud connection. Though these are public IPs, they’re not announced over the internet, so on-premises requests travel over your hybrid cloud connection.
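To make the 199.36.153.8/30 range concrete, the sketch below uses Python's standard `ipaddress` module to list the four addresses it covers and to confirm that the range lies outside the three IETF private ranges, which is why the text calls it a public IP. The variable names are illustrative only.

```python
import ipaddress

# The range mentioned above for private.googleapis.com.
vip_range = ipaddress.ip_network("199.36.153.8/30")

# Iterating a network yields every address in it, including first and last.
vip_addresses = [str(addr) for addr in vip_range]

# The three private ranges defined by the IETF.
private_ranges = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]
in_private_space = any(vip_range.subnet_of(net) for net in private_ranges)

print(vip_addresses)     # the four addresses on-premises DNS would return
print(in_private_space)  # False: publicly routable, just not announced
```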

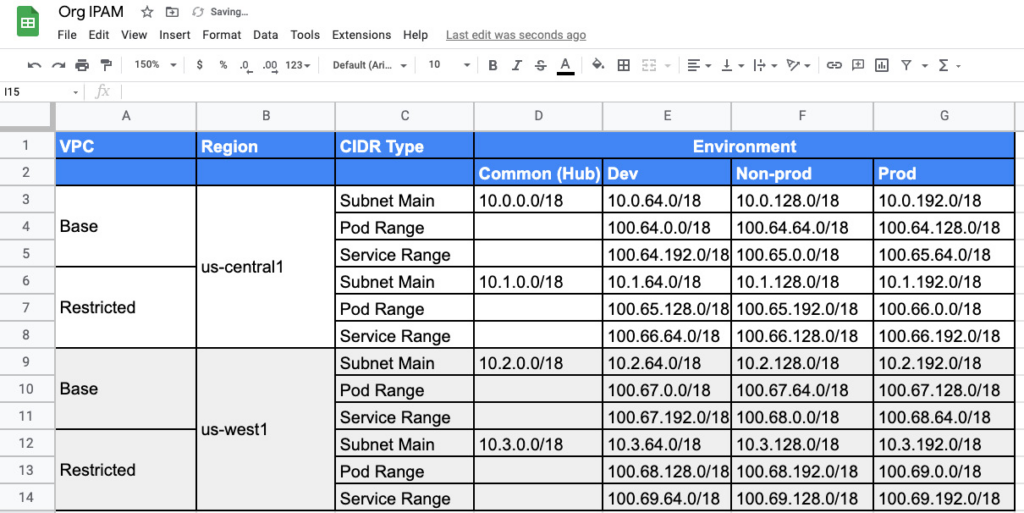

IPAM (base range: 10.0.0.0/8, subnet size: /18)
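As a rough illustration of what this IPAM plan implies, the following sketch carves the 10.0.0.0/8 base range into /18 subnets with Python's standard `ipaddress` module. The variable names are assumptions made for the example; the figure may allocate the subnets in a different order.

```python
import ipaddress
from itertools import islice

base = ipaddress.ip_network("10.0.0.0/8")
subnet_prefix = 18

# Each extra prefix bit doubles the number of subnets: 2^(18 - 8).
subnet_count = 2 ** (subnet_prefix - base.prefixlen)

# An IPv4 /18 leaves 32 - 18 = 14 host bits.
addresses_per_subnet = 2 ** (32 - subnet_prefix)

# subnets() is a generator, so take only the first few blocks.
first_four = [str(s) for s in islice(base.subnets(new_prefix=subnet_prefix), 4)]

print(subnet_count, addresses_per_subnet)
print(first_four)
```

With these parameters the plan yields 1,024 possible subnets of 16,384 addresses each.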

Google Kubernetes Engine (GKE) additionally adopts a flat network structure for all clusters in a VPC, which means that each Pod in each cluster has its own IP in the VPC and can communicate with Pods in other clusters directly (without needing NAT), a useful property which enables advanced features like container-native load balancing.

The Internet Engineering Task Force (IETF) has defined three IP address ranges as private addresses.

- 10.0.0.0/8: 10.0.0.0 to 10.255.255.255

- 172.16.0.0/12: 172.16.0.0 to 172.31.255.255

- 192.168.0.0/16: 192.168.0.0 to 192.168.255.255

The 10.0.0.0/8 range has 16,777,216 addresses, the 172.16.0.0/12 range has 1,048,576 addresses, and the 192.168.0.0/16 range has 65,536 addresses.
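These counts follow from the prefix length: an IPv4 block with prefix /n contains 2^(32 − n) addresses. A minimal check using Python's standard `ipaddress` module (the `sizes` and `bounds` names are illustrative):

```python
import ipaddress

private_cidrs = ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")

sizes = {}
bounds = {}
for cidr in private_cidrs:
    net = ipaddress.ip_network(cidr)
    sizes[cidr] = net.num_addresses           # equals 2 ** (32 - net.prefixlen)
    bounds[cidr] = (str(net[0]), str(net[-1]))  # first and last address in the block

for cidr in private_cidrs:
    print(f"{cidr}: {bounds[cidr][0]} to {bounds[cidr][1]}, {sizes[cidr]:,} addresses")
```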