For large workloads, Google Cloud recommends regional GKE clusters in VPC-native networking mode. In this mode, the cluster's VPC subnet carries secondary IP address ranges that are used for all Pods running in the cluster. Traffic to Pods is then routed automatically, without adding any custom routes to the VPC.

Designing scalable clusters also means designing for security. In regular GKE clusters, every worker Node is assigned both a private and a public IP address. Unless you have a specific reason to expose your worker Nodes to the internet, Google Cloud recommends using a private cluster instead. In a private cluster, worker Nodes have only private IP addresses and are therefore isolated from the internet.

Service discovery in GKE is provided by kube-dns, the main component responsible for DNS resolution for running Pods. As the workload increases, GKE automatically scales this component. For very large clusters, however, this is not enough. Google Cloud’s recommendation for high DNS scalability is therefore to handle DNS queries locally on each Node, which you can do by enabling NodeLocal DNSCache. This caching mechanism lets every worker Node answer queries locally and thus provides faster response times.

IP address planning should follow these recommendations:

- The Kubernetes control-plane IP address range should be a private range (RFC 1918) and should not overlap with any other subnets within the VPC.

- The Node subnet should accommodate the maximum number of Nodes expected in the cluster. The cluster autoscaler can be used to cap the maximum number of Nodes in each node pool.
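
The two checks above can be sketched with Python's standard `ipaddress` module. This is only an illustrative planning aid, not a GKE tool: the function names and the example CIDR values are hypothetical, and the count assumes the four addresses that Google Cloud reserves in every primary subnet range.

```python
import ipaddress

# Google Cloud reserves 4 addresses in each primary IPv4 subnet range
# (network, default gateway, second-to-last, and broadcast).
RESERVED_PER_SUBNET = 4


def usable_node_addresses(subnet_cidr: str) -> int:
    """Return how many addresses in the Node subnet are left for Nodes."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - RESERVED_PER_SUBNET


def find_overlaps(ranges: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of named ranges that overlap, in sorted name order."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    names = sorted(nets)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if nets[a].overlaps(nets[b])
    ]


# Hypothetical plan: the on-premises range collides with the Node subnet.
plan = {
    "control-plane": "172.16.0.0/28",
    "nodes": "10.0.0.0/22",
    "on-prem": "10.0.2.0/24",
}
print(usable_node_addresses(plan["nodes"]))
print(find_overlaps(plan))
```

Running a check like this before creating the subnets makes it easy to see whether the Node subnet is large enough for the autoscaler's maximum and whether any range collides with another.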

Pod and Service IP addresses should be allocated from secondary ranges of your Node subnet, using alias IP addresses in VPC-native clusters. In addition, follow these recommendations:

- When creating GKE subnets, it is recommended to use custom subnet mode to allow flexibility in CIDR design.

- The Pod subnet should be sized according to the maximum number of Pods per Node. By default, GKE allocates a /24 range (256 addresses) to each Node, which supports a maximum of 110 Pods per Node. If you plan to run fewer Pods per Node than this, size the CIDR accordingly.

- Avoid overlapping IP addresses between GKE subnets and on-premises subnets. Google recommends using the 100.64.0.0/10 (RFC 6598) range, which avoids interoperability issues.

- If you are exposing GKE services within your VPC, Google Cloud recommends using a separate subnet for internal TCP/UDP load balancing. This also allows you to improve security on the traffic to and from your GKE services.
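
The Pod range sizing described above can be worked through as arithmetic. The sketch below assumes the rule that GKE gives each Node a range with at least twice as many addresses as its maximum number of Pods, rounded up to a power of two; this reproduces the /24 for 110 Pods mentioned above. The function names and the example Pod range are hypothetical.

```python
import ipaddress


def per_node_prefix(max_pods_per_node: int) -> int:
    """Prefix length of the per-Node Pod range under the assumed 2x rule."""
    needed = 2 * max_pods_per_node
    # (needed - 1).bit_length() is ceil(log2(needed)) for needed >= 1.
    host_bits = (needed - 1).bit_length()
    return 32 - host_bits


def max_nodes_for_pod_range(pod_cidr: str, max_pods_per_node: int) -> int:
    """Number of per-Node ranges that fit in the Pod secondary range."""
    pod_net = ipaddress.ip_network(pod_cidr)
    node_prefix = per_node_prefix(max_pods_per_node)
    if node_prefix < pod_net.prefixlen:
        return 0  # the Pod range is smaller than a single per-Node range
    return 2 ** (node_prefix - pod_net.prefixlen)


# Hypothetical Pod secondary range taken from the RFC 6598 space.
pod_range = "100.64.0.0/14"
for pods in (110, 32, 8):
    print(pods, per_node_prefix(pods), max_nodes_for_pod_range(pod_range, pods))
```

The loop shows the trade-off: with the same Pod range, lowering the maximum number of Pods per Node shrinks each per-Node range and so raises the number of Nodes the range can serve.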

Important Note

If you decide to use an Autopilot cluster (that is, GKE in Autopilot mode), remember that the maximum number of Pods per Node is 32, with a /26 range allocated to each Node. These settings cannot be changed.

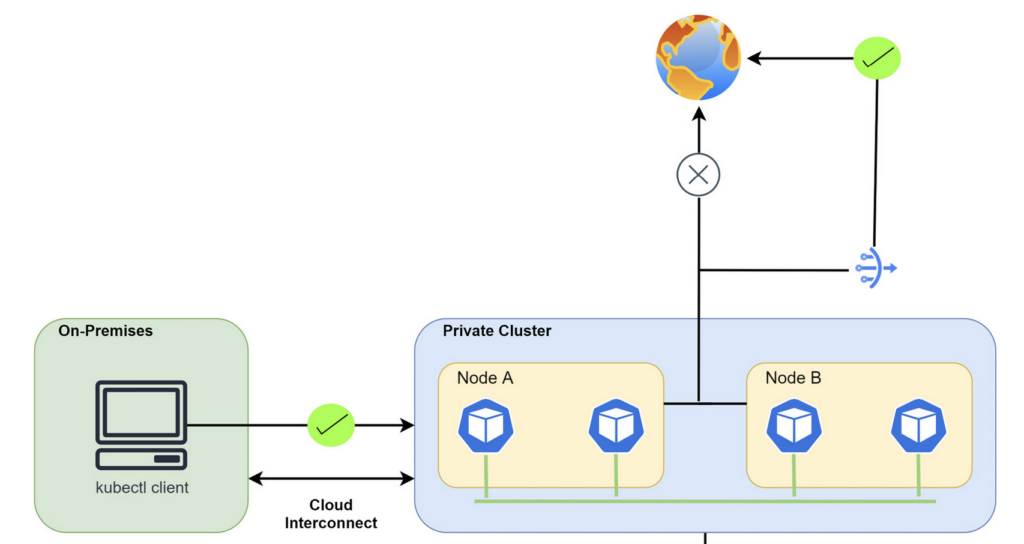

When designing network security in GKE, the recommendation is to choose a private cluster and control access to it with authorized networks. Authorized networks let you choose which IP addresses (both private and public) can manage your cluster, which improves its security. In a private cluster, all the IP ranges are private, but you can still access the API server through its public IP address. In addition, use Cloud NAT to let the worker Nodes access the internet, and enable Private Google Access to let worker Nodes reach Google services privately.
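
Conceptually, authorized networks act as an allowlist of CIDR ranges for the cluster's API server. The following minimal sketch illustrates that idea only; it is not how GKE implements the check, and the function name and addresses (taken from documentation ranges) are hypothetical.

```python
import ipaddress


def is_authorized(client_ip: str, authorized_cidrs: list[str]) -> bool:
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(cidr) for cidr in authorized_cidrs)


# Hypothetical allowlist: one private range and one public office range.
authorized = ["10.0.0.0/8", "203.0.113.0/24"]
print(is_authorized("203.0.113.25", authorized))
print(is_authorized("198.51.100.7", authorized))
```

A client whose source address is outside every listed range would be refused access to the control plane, regardless of whether that address is private or public.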

In a private cluster, worker Nodes have private IP addresses only. If they need to reach the internet to download upgrades or system patches, they must use Cloud NAT. Pods communicate internally through the dedicated secondary subnet.